mogaze

MoGaze: A Dataset of Full-Body Motions that Includes Workspace Geometry and Eye-Gaze

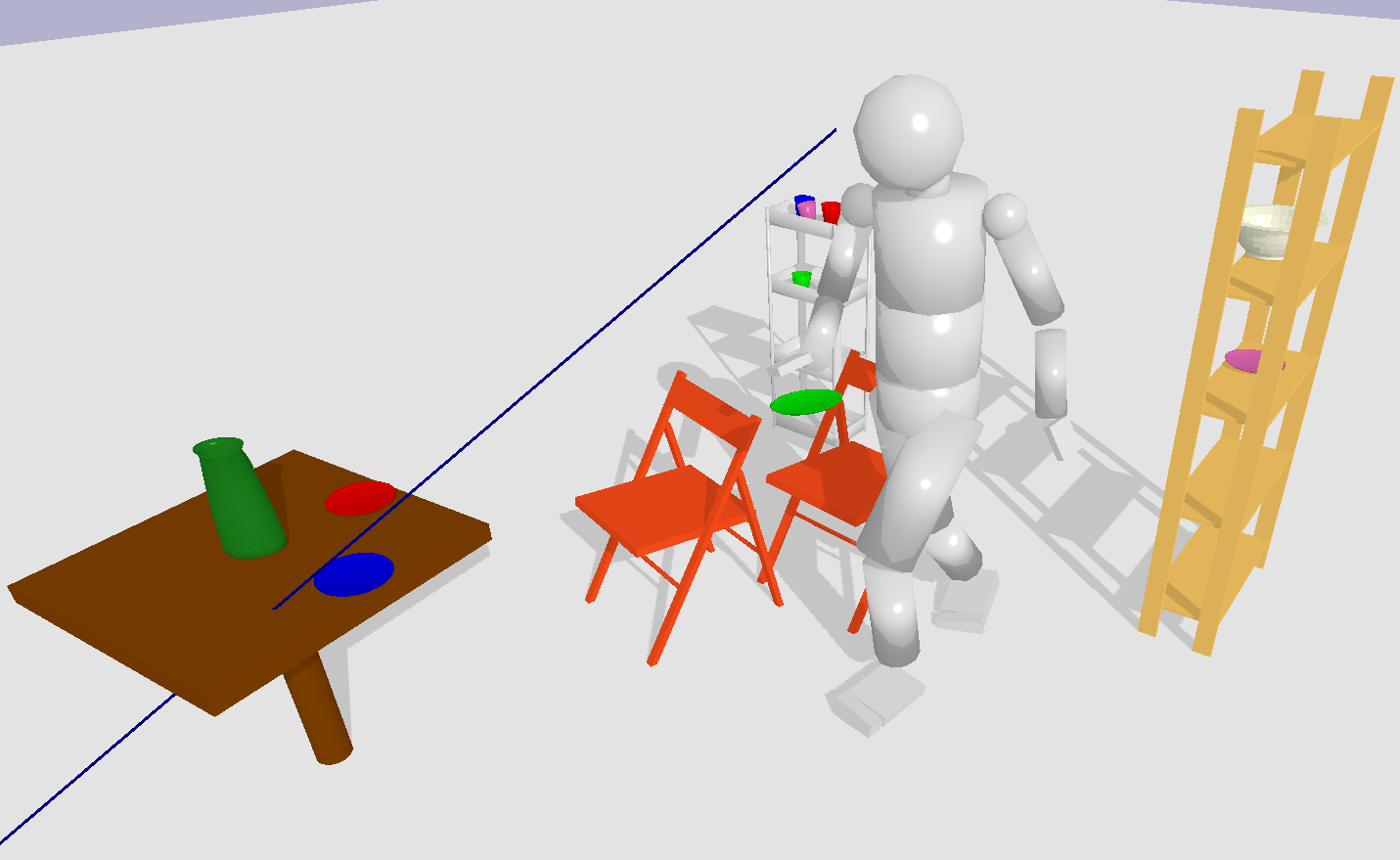

This dataset captures long sequences of full-body everyday manipulation tasks, together with eye-gaze.

A detailed description can be found on arXiv.

The motion data was captured using a traditional motion capture system based on reflective markers. We additionally captured eye-gaze using a wearable pupil-tracking device. The dataset can be used for the design and evaluation of full-body motion prediction algorithms. Furthermore, our experiments show that eye-gaze is a powerful predictor of human intent. The dataset includes 180 min of motion capture data, during which 1627 pick-and-place actions are performed.

Citation

When using this dataset, please cite the following paper in your work:

@article{kratzer2020mogaze,
  title={MoGaze: A Dataset of Full-Body Motions that Includes Workspace Geometry and Eye-Gaze},
  author={Kratzer, Philipp and Bihlmaier, Simon and Balachandra Midlagajni, Niteesh and Prakash, Rohit and Toussaint, Marc and Mainprice, Jim},
  journal={IEEE Robotics and Automation Letters (RAL)},
  year={2020}
}

Data

All data is available in the single file “data/mogaze.7z”.

Getting Started

You can play back the data using the humoro library.

Here is a tutorial on getting started with it: Getting Started

Visualization

To simply visualize the data files, you can use the play_traj.py example. For instance, the following command plays the first file of participant one:

python3 examples/playback/play_traj.py mogaze/p1_1_human_data.hdf5 --gaze mogaze/p1_1_gaze_data.hdf5 --obj mogaze/p1_1_object_data.hdf5 --segfile mogaze/p1_1_segmentations.hdf5 --scene mogaze/scene.xml
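To play other recordings without retyping every path, the command above can be generated from a participant number and a recording index. The following is a minimal sketch, not part of humoro: the helper name `playback_command` is hypothetical, and it assumes that every recording follows the `p<participant>_<recording>_*` file naming seen in the command above, which you should check against the extracted archive.

```python
import shlex
from pathlib import PurePosixPath


def playback_command(participant, recording, data_dir="mogaze"):
    """Build the play_traj.py argument list for one recording.

    Hypothetical helper: assumes the p<participant>_<recording>_* naming
    from the example command holds for all files in the dataset.
    """
    root = PurePosixPath(data_dir)
    prefix = f"p{participant}_{recording}"
    return [
        "python3",
        "examples/playback/play_traj.py",
        str(root / f"{prefix}_human_data.hdf5"),
        "--gaze", str(root / f"{prefix}_gaze_data.hdf5"),
        "--obj", str(root / f"{prefix}_object_data.hdf5"),
        "--segfile", str(root / f"{prefix}_segmentations.hdf5"),
        "--scene", str(root / "scene.xml"),
    ]


if __name__ == "__main__":
    # Print the shell command for the first file of participant one.
    print(shlex.join(playback_command(1, 1)))
```

The returned list can be passed directly to `subprocess.run` from the humoro repository root, which avoids shell quoting issues if the data directory contains spaces.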